Introduction

Localisation is a central problem in robotics and it is very relevant to the AVP project. A self-driving car that is looking for an empty parking slot must know where it is on a map. For a precise manoeuvre, such as parking, an equally precise map and localisation algorithm are required.

The AVP project also has to respect a realistic budget for sensors, which rules out LiDARs in favour of cameras and IMUs. For this reason the project is committed to developing a vision-based localisation solution that uses HD Maps. Vision-based localisation, however, is very difficult, and no one has yet demonstrated a system that works accurately and robustly in a fully general environment.

Within the International Organization for Standardization (ISO), Technical Committee 204, Working Group 14, Parkopedia is part of a drafting team that is developing a standard for Automated Valet Parking Systems (AVPS). The drafting team has agreed on the requirement for Artificial Landmarks, i.e. fiducial markers that are manually positioned in a car park to enable accurate, robust localisation. At a minimum, artificial landmarks are necessary around the pick-up and drop-off zones to initialise the localisation system of a vehicle equipped with AVPS.

The next section will give an overview of localisation with landmarks.

Background to localisation with landmarks

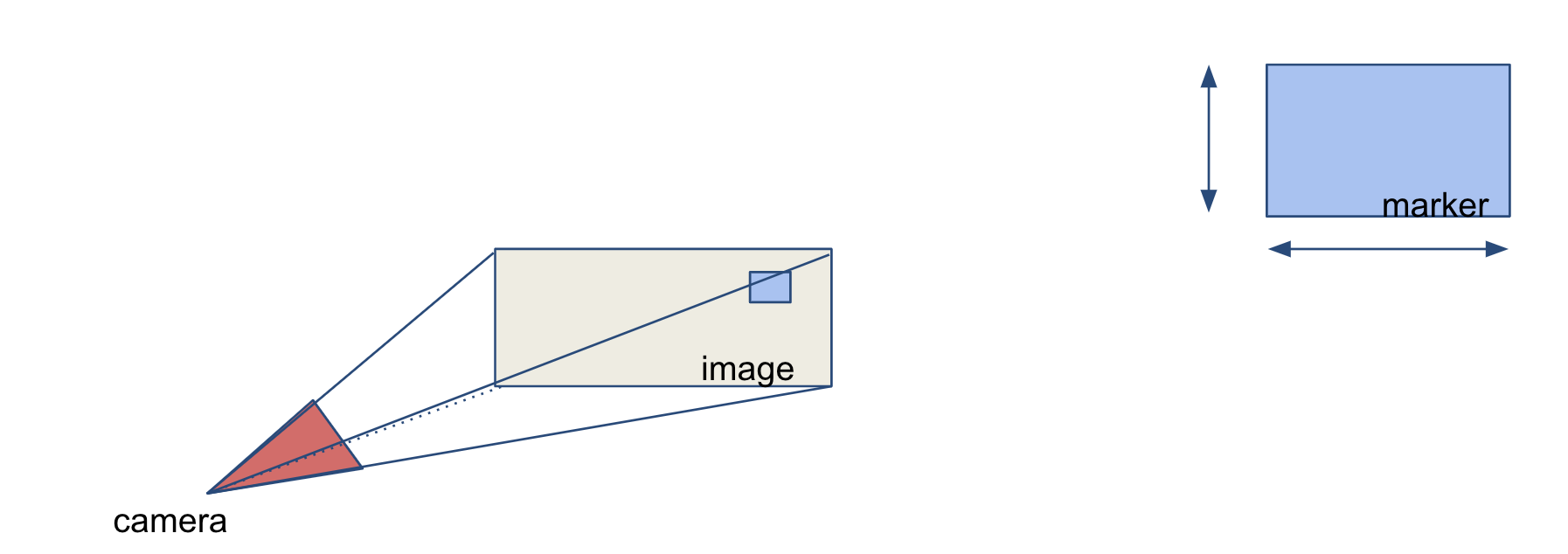

The first step of localisation with landmarks consists of detecting the landmark with the available sensors. In this example we are using a camera, so we need to find the pixel coordinates of a landmark in an image. Note that we use the terms landmark and marker interchangeably.

The second step is to estimate the sensor position with respect to the landmark. In the case of a calibrated camera and of a marker with known size, a single image is sufficient to estimate the rigid transformation between the camera and the marker. The algorithm used is a variation of Perspective-n-Point.

The third and last step is to estimate the pose of the camera in the map frame. We know the position of the camera with respect to the marker from step 2. Provided that the marker is uniquely identifiable, we can look up its position in the map. By chaining the two transformations, we obtain the desired pose of the camera in the map frame.
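The chaining in step 3 can be sketched with plain 4×4 homogeneous transforms. This is a minimal illustration, not the project's implementation: the names `Transform`, `compose`, `invertRigid` and `cameraInMap` are hypothetical, and we assume that step 2 yields the marker pose expressed in the camera frame, so it has to be inverted before chaining.

```cpp
#include <array>

// Minimal 4x4 homogeneous rigid transform (rotation + translation), row-major.
using Transform = std::array<std::array<double, 4>, 4>;

// Matrix product a * b: the result applies b first, then a.
Transform compose(const Transform& a, const Transform& b) {
  Transform out{};  // zero-initialised
  for (int i = 0; i < 4; ++i)
    for (int j = 0; j < 4; ++j)
      for (int k = 0; k < 4; ++k)
        out[i][j] += a[i][k] * b[k][j];
  return out;
}

// Inverse of a rigid transform: rotation R^T, translation -R^T * t.
Transform invertRigid(const Transform& t) {
  Transform out{};
  for (int i = 0; i < 3; ++i) {
    for (int j = 0; j < 3; ++j) {
      out[i][j] = t[j][i];
      out[i][3] -= t[j][i] * t[j][3];
    }
  }
  out[3][3] = 1.0;
  return out;
}

// Pose of the camera in the map frame, given the surveyed marker pose in the
// map (map_T_marker) and the marker pose measured in the camera frame
// (camera_T_marker, assumed to come from a Perspective-n-Point solver).
Transform cameraInMap(const Transform& map_T_marker,
                      const Transform& camera_T_marker) {
  return compose(map_T_marker, invertRigid(camera_T_marker));
}
```

For example, with an unrotated marker at (10, 5, 0) in the map that is seen 2 m straight ahead along the camera's z axis, `cameraInMap` places the camera at (10, 5, -2) in the map.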

Artificial Landmarks

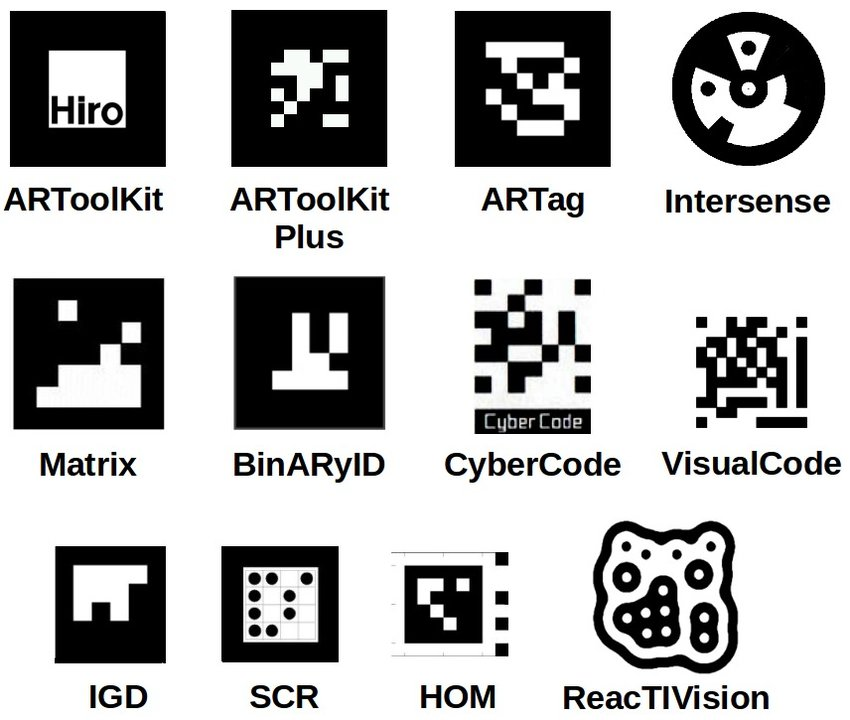

There are many designs for artificial landmarks in the literature.

Given the localisation process outlined in the previous section, we know some requirements for a good landmark. It must be easy to detect, to facilitate the first step. It must be distinctive enough to be told apart from the other landmarks. Finally, its size and shape must be easy to handle in practice.

Designs have converged on a black-and-white square marker because of its properties:

- High contrast

- Simple geometry

- Easy to encode information

High contrast and a square shape are clearly useful for detection because they can be exploited by established computer vision techniques, such as thresholding or line detection.

The information encoding leaves more degrees of freedom, which different designs use in different ways, always keeping in mind the goal of having highly distinguishable landmarks.

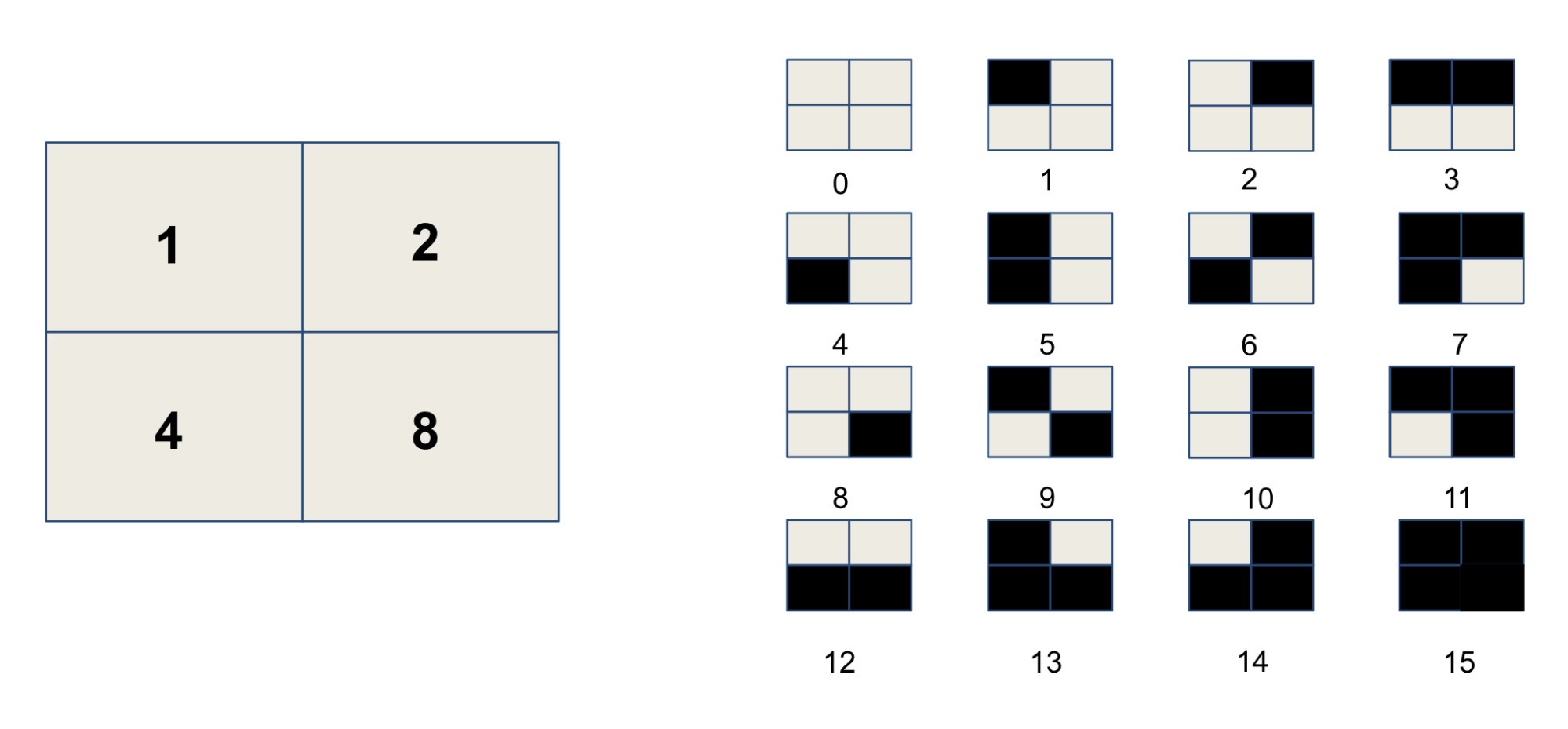

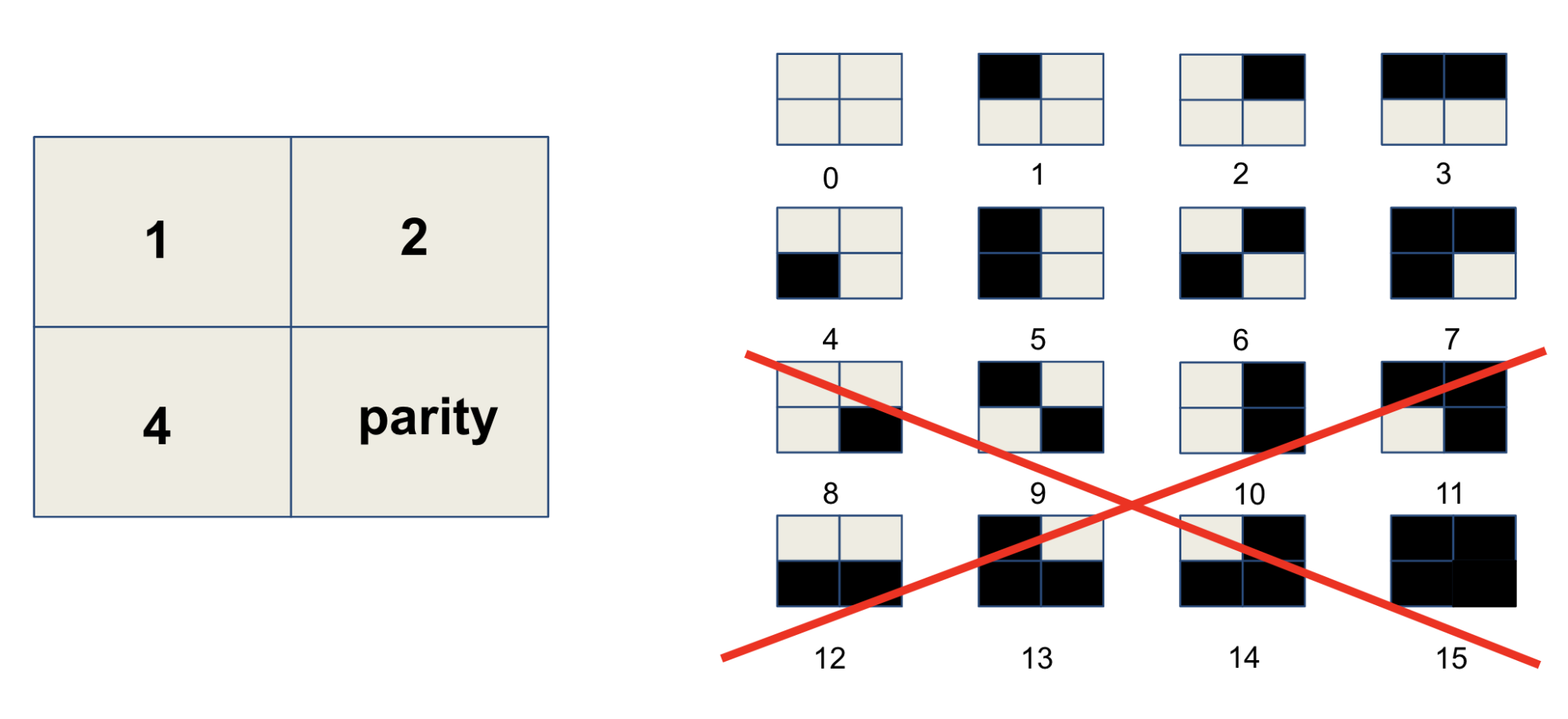

The basis of all the approaches is to subdivide the marker into a grid of small squares and use a binary encoding. That is, each square is assigned a power of two, which is included or not depending on whether the square is black or white. With a 2×2 grid we can represent 16 values, as shown in the accompanying image.
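As a minimal sketch of this encoding, the function below turns a 2×2 grid into its value. The row-major ordering of the squares and the choice that black means "selected" are assumptions made for illustration; `Grid2x2` and `markerId` are hypothetical names.

```cpp
#include <array>
#include <cstddef>
#include <cstdint>

// A 2x2 marker stored row by row; true means the square is black.
using Grid2x2 = std::array<bool, 4>;

// Assign the powers of two 1, 2, 4, 8 to the squares in row-major order
// (an assumed ordering) and combine the powers of the black squares.
std::uint8_t markerId(const Grid2x2& squares) {
  std::uint8_t id = 0;
  for (std::size_t i = 0; i < squares.size(); ++i) {
    if (squares[i]) id |= static_cast<std::uint8_t>(1u << i);
  }
  return id;
}
```

An all-white grid maps to 0 and an all-black grid to 15, which gives the 16 values mentioned above.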

If we were to use all the possible markers for a given grid size, we would have the problem of erroneous detections. Some markers are very similar, and minor occlusions or image noise could confuse the detection process. There are two main ways to deal with this problem, both based on the idea of sacrificing some information capacity to achieve greater robustness.

The first of these strategies is to reserve some squares for error detection. These squares convey no information about the id of the marker, but act as a necessary condition for correctness. The use of parity bits is widely studied in information theory and different schemes are available with different properties.
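The simplest scheme of this kind is a single even-parity bit. The sketch below is only an illustration of the idea, with hypothetical function names; real marker designs typically use several parity bits.

```cpp
#include <cstdint>

// Even-parity bit for the low `num_bits` bits of `data`: 1 if the number of
// set bits is odd, so that data plus parity always contains an even number
// of ones.
unsigned parityBit(std::uint32_t data, unsigned num_bits) {
  unsigned parity = 0;
  for (unsigned i = 0; i < num_bits; ++i) parity ^= (data >> i) & 1u;
  return parity;
}

// A read-out is accepted only if the stored parity matches the recomputed one.
bool passesParityCheck(std::uint32_t data, unsigned num_bits,
                       unsigned stored_parity) {
  return parityBit(data, num_bits) == stored_parity;
}
```

A single parity bit detects any odd number of flipped squares but cannot tell which square is wrong, which is why richer schemes exist.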

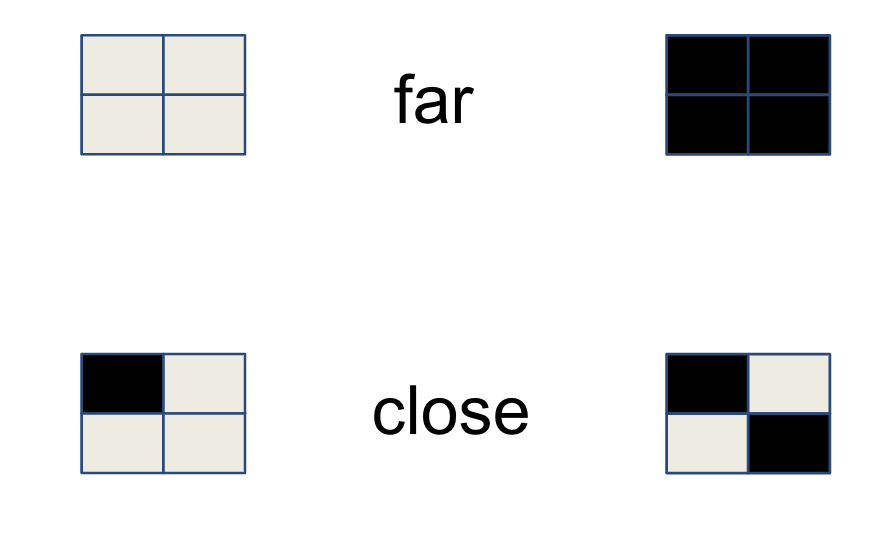

The second strategy is to maximise the distance between markers. Intuitively, two markers with no square in common are far apart, while a marker is maximally close to itself. This notion is formalised by the Hamming distance, the number of squares in which two markers differ, which is also a widely studied topic in information theory.
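Assuming each marker is packed into an integer with one bit per square, the Hamming distance is the number of set bits in the XOR of the two values. A minimal sketch (the function name is ours):

```cpp
#include <bitset>
#include <cstdint>

// Number of squares in which two markers differ; each marker is packed into
// an integer with one bit per square.
unsigned hammingDistance(std::uint32_t a, std::uint32_t b) {
  return static_cast<unsigned>(std::bitset<32>(a ^ b).count());
}
```

For a 2×2 grid, the all-white and all-black markers are at distance 4, while any marker is at distance 0 from itself.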

The next two sections will analyse two marker types: ArUco and the standard proposed by the ISO drafting team.

ArUco markers

ArUco markers are a state-of-the-art fiducial marker system explicitly designed for localisation.

The information encoding is designed for optimal inter-marker distance. Possible markers are iteratively sampled and only the ones with a sufficiently large Hamming distance from those already selected are kept.

ArUco is very flexible as it provides multiple dictionaries with different sizes. The authors of the ArUco paper provide a production-grade implementation in OpenCV that also has augmented reality capabilities, which are very useful for debugging.

The following code snippet is an example of the use of the ArUco library for localisation.
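The selection loop can be sketched as follows. This is a simplified illustration and not the ArUco authors' algorithm: it draws uniformly random 4×4 candidates, compares them under all four rotations, and omits the check that a marker is also far from its own rotations. All names and parameters are hypothetical.

```cpp
#include <algorithm>
#include <bitset>
#include <cstddef>
#include <cstdint>
#include <random>
#include <vector>

constexpr int kSide = 4;  // 4x4 grid, one bit per square, row-major

// Rotate a 4x4 bit pattern by 90 degrees clockwise.
std::uint16_t rotate90(std::uint16_t code) {
  std::uint16_t out = 0;
  for (int r = 0; r < kSide; ++r)
    for (int c = 0; c < kSide; ++c)
      if (code & (1u << (r * kSide + c)))
        out |= static_cast<std::uint16_t>(1u << (c * kSide + (kSide - 1 - r)));
  return out;
}

unsigned hamming(std::uint16_t a, std::uint16_t b) {
  return static_cast<unsigned>(std::bitset<16>(a ^ b).count());
}

// Smallest Hamming distance between `a` and any of the four rotations of `b`.
unsigned rotationInvariantDistance(std::uint16_t a, std::uint16_t b) {
  unsigned best = 16;
  for (int k = 0; k < 4; ++k) {
    best = std::min(best, hamming(a, b));
    b = rotate90(b);
  }
  return best;
}

// Draw random candidates and keep those at least `min_distance` away from
// every marker accepted so far. May return fewer than `size` markers.
std::vector<std::uint16_t> buildDictionary(std::size_t size,
                                           unsigned min_distance,
                                           unsigned max_attempts,
                                           unsigned seed) {
  std::mt19937 rng(seed);
  std::vector<std::uint16_t> dictionary;
  for (unsigned i = 0; i < max_attempts && dictionary.size() < size; ++i) {
    const auto candidate = static_cast<std::uint16_t>(rng() & 0xFFFFu);
    const bool far_enough = std::all_of(
        dictionary.begin(), dictionary.end(), [&](std::uint16_t accepted) {
          return rotationInvariantDistance(candidate, accepted) >= min_distance;
        });
    if (far_enough) dictionary.push_back(candidate);
  }
  return dictionary;
}
```

Raising `min_distance` makes the markers easier to tell apart but reduces how many of them fit in a dictionary of a given grid size.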

    #include <opencv2/aruco.hpp>
    #include <opencv2/core.hpp>

    // Retrieve image of environment with ArUco marker
    cv::Mat input_image = /* retrieve image from camera */;

    // Initialise predefined dictionary DICT_4X4_250
    auto dictionary = cv::aruco::getPredefinedDictionary(cv::aruco::DICT_4X4_250);

    // Initialise MarkerVector struct, output parameter of the detection function
    // (holds the corners, ids, rotations and translations of the detected markers)
    MarkerVector markers;

    // Detect markers in the image
    cv::aruco::detectMarkers(input_image, dictionary, markers.corners, markers.ids);

    // Initialise camera intrinsics
    cv::Mat K = /* camera matrix */;
    cv::Mat D = /* distortion coefficients */;

    // Set marker size
    float marker_size = /* marker size including black border */;

    // Estimate marker pose
    cv::aruco::estimatePoseSingleMarkers(markers.corners, marker_size, K, D,
                                         markers.rotations, markers.translations);

We provide a downloadable PDF version of the ArUco dictionary DICT_4X4_250. An A2 version, more suitable for printing, is also provided for convenience. It is common to print the markers on waterproof PVC and mount them on 3 mm plastic or aluminium.

ISO markers

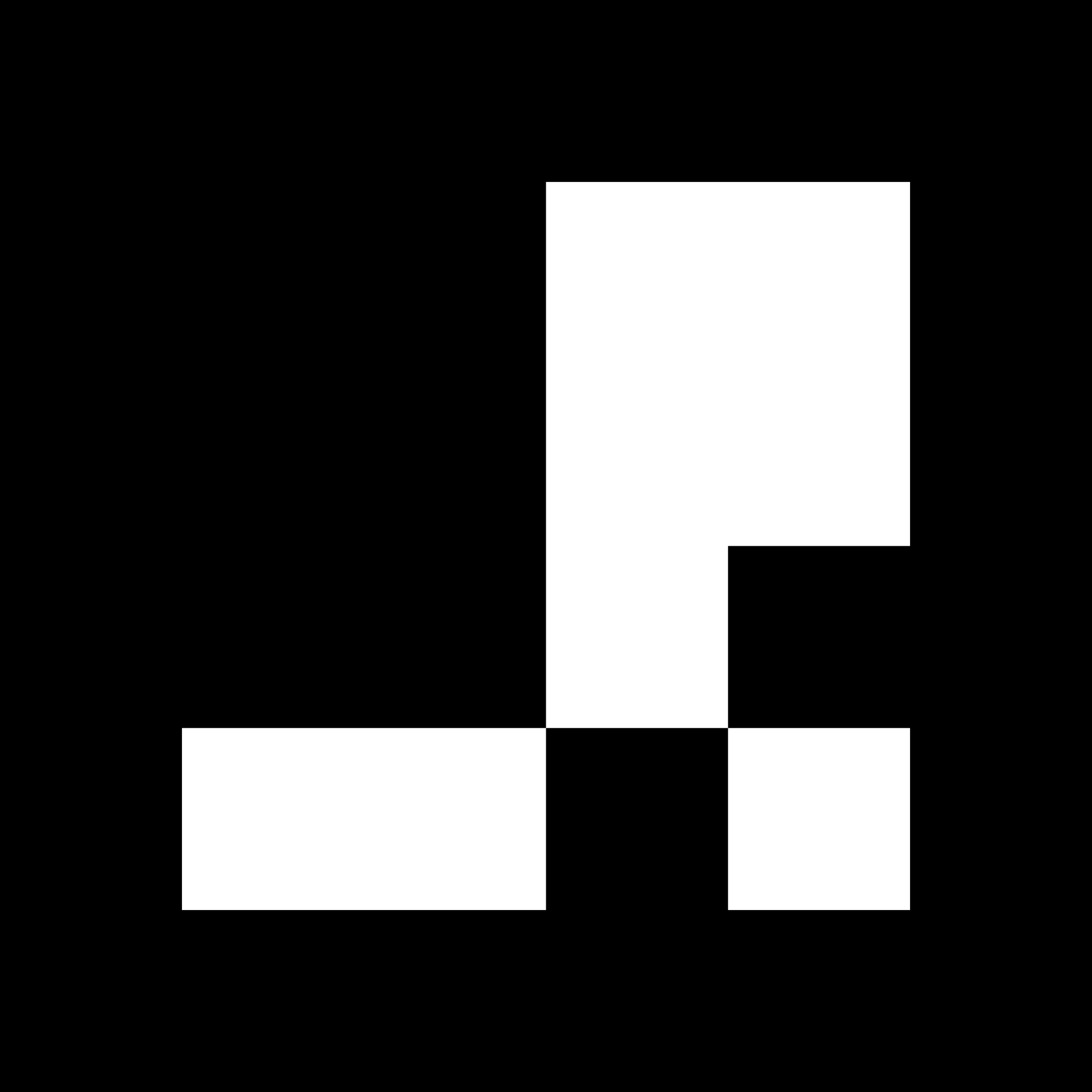

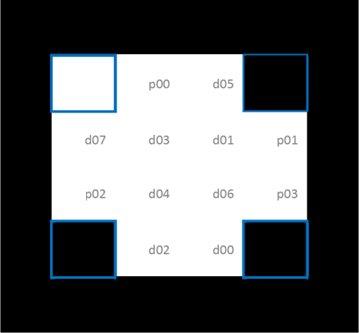

The AVPS drafting team has chosen to use a custom definition for artificial landmarks that explicitly encodes the orientation, data bits and parity bits for error checking. This encoding can be seen in the accompanying image. Rotation is encoded through the four corner squares, with the top left white and the remaining three black. With the orientation fixed, 8 data bits and 4 parity bits are encoded in the remaining 12 squares to create a Hamming code.
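To illustrate how 8 data bits and 4 parity bits form a 12-bit Hamming codeword, here is a sketch of the textbook Hamming(12,8) construction. The bit layout is an assumption: it follows the classic scheme with parity bits at positions 1, 2, 4 and 8, and is not necessarily the assignment of squares used in the ISO draft. The function name is hypothetical.

```cpp
#include <cstdint>

// Textbook Hamming(12,8): codeword positions are numbered 1..12, parity bits
// sit at positions 1, 2, 4 and 8, and data bits fill the other eight
// positions. Position p is stored in bit (p - 1) of the returned value.
std::uint16_t hammingEncode(std::uint8_t data) {
  std::uint16_t code = 0;

  // Place the data bits in the non-power-of-two positions.
  int data_index = 0;
  for (int pos = 1; pos <= 12; ++pos) {
    const bool is_parity_position = (pos & (pos - 1)) == 0;
    if (is_parity_position) continue;
    if ((data >> data_index) & 1u)
      code |= static_cast<std::uint16_t>(1u << (pos - 1));
    ++data_index;
  }

  // Each parity bit makes the parity of the positions it covers even; the
  // parity bit at position 2^k covers every position with bit k set.
  for (int parity_pos = 1; parity_pos <= 8; parity_pos <<= 1) {
    unsigned parity = 0;
    for (int pos = 1; pos <= 12; ++pos)
      if (pos & parity_pos) parity ^= (code >> (pos - 1)) & 1u;
    if (parity) code |= static_cast<std::uint16_t>(1u << (parity_pos - 1));
  }
  return code;
}
```

With this layout, the data byte 1 encodes to the codeword 0x007: the single data bit at position 3 is covered by the parity bits at positions 1 and 2, which are therefore both set.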

It is possible to create custom dictionaries in OpenCV to use with the ArUco library. We have encoded the ISO dictionary as a custom one in a single header file which you can just include in your software.

Only one line of code from the previous example has to change – the creation of the dictionary – then the same code can be used to detect ISO markers.

    auto dictionary = cv::makePtr<cv::aruco::Dictionary>(iso::generateISODictionary());

By using the library in this straightforward way, we see a lot of false positives, because we are not using the error-correcting properties of the Hamming codes. A first step to improve the situation is to set the detector parameter errorCorrectionRate to zero, which disables the default correction done by ArUco. A better solution, one that uses the full potential of the Hamming codes, requires modifying the ArUco detection algorithm.
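The kind of check a modified detector could run on the 12 non-corner bits can be sketched as below. It assumes the textbook Hamming(12,8) layout (position p stored in bit p - 1, parity bits at positions 1, 2, 4 and 8), which may differ from the ISO draft's assignment of squares, and the function names are hypothetical; it is not part of the ArUco library.

```cpp
#include <cstdint>

// Syndrome of a 12-bit read-out: the XOR of the positions (1..12) of all set
// bits. It is 0 for a valid codeword; if exactly one bit is wrong, it equals
// the position of that bit.
unsigned hammingSyndrome(std::uint16_t code) {
  unsigned syndrome = 0;
  for (int pos = 1; pos <= 12; ++pos)
    if ((code >> (pos - 1)) & 1u) syndrome ^= static_cast<unsigned>(pos);
  return syndrome;
}

// Strict policy: accept only read-outs that are valid codewords.
bool isValidCodeword(std::uint16_t code) {
  return hammingSyndrome(code) == 0;
}

// Lenient policy: repair a single flipped bit in place. Returns false if the
// syndrome points outside the 12 positions, which means that more than one
// bit is wrong.
bool correctSingleError(std::uint16_t& code) {
  const unsigned syndrome = hammingSyndrome(code);
  if (syndrome == 0) return true;
  if (syndrome > 12) return false;
  code ^= static_cast<std::uint16_t>(1u << (syndrome - 1));
  return true;
}
```

The two policies trade off differently: the strict one rejects more false positives, while the lenient one recovers markers with one misread square but can silently "correct" a read-out with two errors into the wrong codeword, since the code has minimum distance 3.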

As in the case of ArUco, we provide PDFs of the ISO dictionary as 30×30 cm squares and in A2 format.

Conclusion

A reasonable question at this point is whether all of the above is even necessary. Can we not enable localisation without artificial landmarks?

It turns out that this is a difficult problem and industry is still looking for a solution. As part of the AVP project, the University of Surrey is developing a vision-based localisation algorithm that avoids the use of Artificial Landmarks, and we look forward to demonstrating this technology on the AVP StreetDrone.

Expect to see a StreetDrone parking itself autonomously soon!
